Containerization has become popular with companies because it helps development teams move fast, deploy software efficiently and operate at large scale. With the rise of containerization, Kubernetes has established itself as a leading container management platform.

Without containerization, applications are tightly coupled to the underlying infrastructure, making it difficult to migrate them across environments or scale them effectively. This complicates deployment, resource management and maintenance, and limits the agility and efficiency of software development.

At an annual growth rate of +127 percent, the number of Kubernetes clusters hosted in the cloud grew about five times as fast as clusters hosted on-premises. – Dynatrace

What is Kubernetes?

Kubernetes, also known as “K8s,” is an open-source container orchestration platform originally developed by Google. It offers a powerful solution for automating containerized application deployment, scaling and management.

One of the primary reasons for Kubernetes’ widespread adoption is its ability to address the challenges of deploying and managing complex, distributed applications. Traditionally, deploying applications at scale was complex and time-consuming, involving meticulous configuration and coordination across multiple servers. Kubernetes simplifies this process by automating many deployment and management tasks, freeing developers to concentrate on coding rather than infrastructure intricacies.

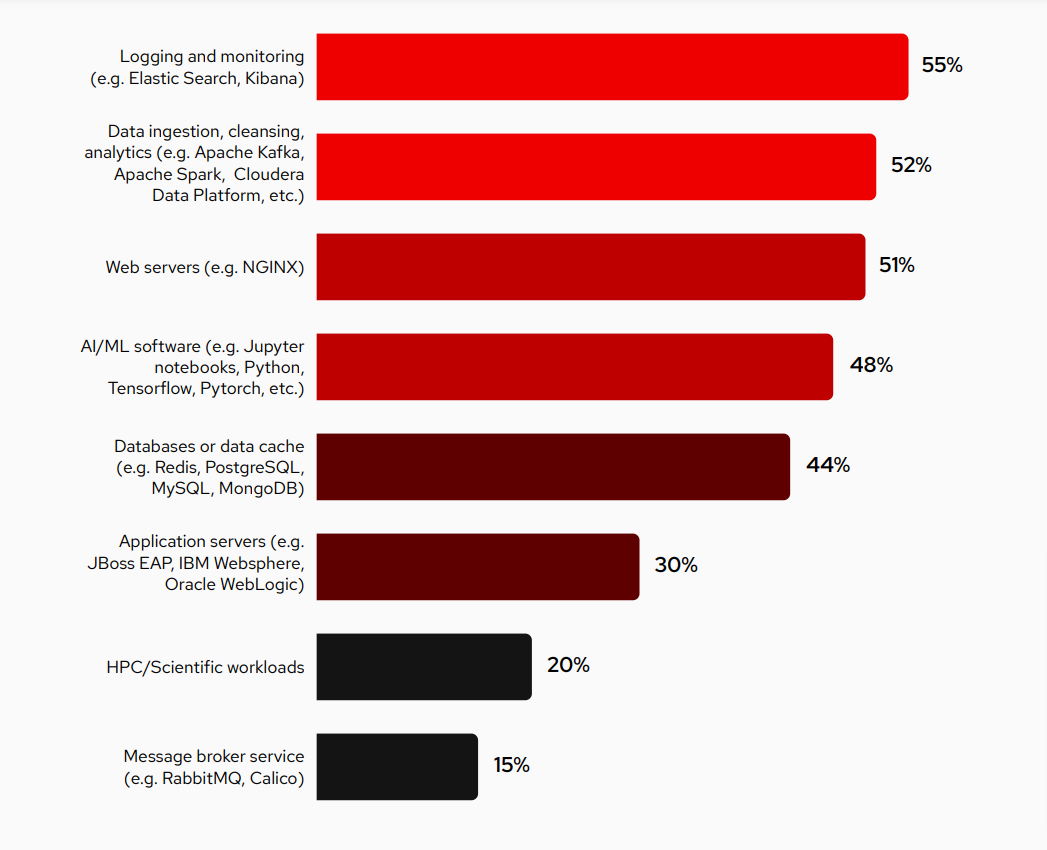

The Gartner Peer Community surveyed 500 enterprise technology leaders about their adoption of Kubernetes and the benefits of deploying a diverse range of mission-critical workloads on it.

Source: https://www.redhat.com

Kubernetes plays a transformative role in software development, empowering teams to streamline their development processes, enhance application scalability and embrace modern software architectures.

What features does Kubernetes offer?

Kubernetes distinguishes itself from other deployment solutions by providing organizations with a range of powerful features to effectively manage their containerized applications. Let’s explore the key features offered by Kubernetes.

Serverless computing with Knative

Kubernetes embraces the serverless paradigm through projects like Knative. Serverless computing is a cloud-native execution model in which the platform provisions and scales resources on demand, making applications easier to develop and more cost-effective to run.

Knative extends Kubernetes with a serverless framework that simplifies the development, deployment and scaling of serverless applications.

Knative supports deploying containerized applications in small, iterative steps, allowing developers to create and deploy new containers and versions quickly. It integrates with automated CI/CD pipelines without the need for specialized software or custom programming.
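As an illustration, a Knative application is declared as a single Service manifest, from which Knative derives revisions, routing and autoscaling. The sketch below builds a minimal manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. It assumes Knative Serving is installed in the cluster; the service name `hello` and the image `example.com/hello:1.0` are hypothetical placeholders.

```python
import json


def knative_service(name: str, image: str, namespace: str = "default") -> dict:
    """Build a minimal Knative Serving Service manifest (serving.knative.dev/v1)."""
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Each change to this template produces a new, immutable Revision.
            "template": {
                "spec": {
                    "containers": [{"image": image}],
                }
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical name and image; replace with your own.
    print(json.dumps(knative_service("hello", "example.com/hello:1.0"), indent=2))
```

Because only the container image is specified, Knative's defaults apply, including scaling the service down to zero replicas when it receives no traffic.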

1. Kubernetes multi-cluster management

Multi-cluster management refers to the practice of efficiently managing multiple Kubernetes clusters as a unified entity. It involves controlling and coordinating resources, workloads and configurations across multiple clusters, which enables organizations to scale their applications and infrastructure effectively. Benefits of multi-cluster Kubernetes include:

- Scalability: Kubernetes multi-cluster management enables seamless scaling of applications across multiple clusters, accommodating growing workloads and user demands.

- High availability: It ensures application availability by distributing workloads, implementing load balancing and automating failover for improved fault tolerance.

- Resource efficiency: Effective cluster management optimizes resource allocation, maximizing efficiency and cost savings.

- Centralized control: Cluster management provides a unified view of multiple clusters, simplifying administration, monitoring and deployment.

- Disaster recovery: It supports replication and backup mechanisms for rapid recovery in case of failures or disasters.

- Consistent configuration: Cluster management enforces consistent configurations and policies across clusters, enhancing compliance and reducing misconfigurations.

- Flexibility and portability: Kubernetes cluster management abstracts application workloads, enabling seamless migration and facilitating hybrid and multi-cloud deployments.

- Collaboration and efficiency: Cluster management promotes team collaboration, role-based access control and integration with DevOps practices for improved productivity.

2. Edge computing: Bringing Kubernetes to remote locations

In this context, edge computing refers to deploying Kubernetes clusters at the network edge, close to where data is generated, to enable localized data processing and application deployment.

A Kubernetes node is a worker machine, virtual or physical, that runs containerized applications within a cluster.

A Kubernetes cluster is a collection of nodes (physical or virtual machines) that collectively run containerized applications and are managed by the Kubernetes control plane.

Three popular architecture options for deploying Kubernetes in edge computing

Cluster at each edge location:

Imagine you have multiple remote locations, such as small offices or stores, and you want to use Kubernetes to manage the applications running at each location. One option is to set up a complete Kubernetes cluster at each location: a separate set of machines, either highly available or just a single server, dedicated to running Kubernetes. This approach gives each location independence, but it also adds the complexity of managing many clusters, which requires cloud provider services or custom tools for monitoring and management.

Single cluster with edge nodes:

Suppose you have a central data center where you set up a single Kubernetes cluster. Instead of deploying separate clusters at each remote location, you deploy small computing nodes at those locations that join the central cluster and run the applications locally. This setup suits resource-constrained devices, because the control plane and other management tasks run centrally, in the data center or the cloud. However, networking becomes a challenge, as communication and data synchronization between the control plane and the edge locations must be addressed.
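In the single-cluster model, one common way to keep workloads on the edge nodes is to label and taint those nodes, then give the workload a matching `nodeSelector` and toleration. The sketch below builds such a Deployment manifest in Python. The label `node-role.example.com/edge` and the taint key `example.com/edge` are hypothetical names chosen for illustration, not Kubernetes built-ins.

```python
import json

EDGE_LABEL = "node-role.example.com/edge"  # hypothetical node label
EDGE_TAINT = "example.com/edge"            # hypothetical taint key


def edge_deployment(name: str, image: str, replicas: int = 1) -> dict:
    """Build a Deployment whose pods run only on (tainted) edge nodes."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Schedule only onto nodes that carry the edge label.
                    "nodeSelector": {EDGE_LABEL: "true"},
                    # Tolerate the taint that keeps ordinary pods off edge nodes.
                    "tolerations": [{
                        "key": EDGE_TAINT,
                        "operator": "Exists",
                        "effect": "NoSchedule",
                    }],
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(edge_deployment("sensor-gateway", "example.com/gateway:1.0"), indent=2))
```

The edge nodes themselves would be prepared with commands such as `kubectl label node <node-name> node-role.example.com/edge=true` and `kubectl taint node <node-name> example.com/edge=true:NoSchedule`. The taint keeps central workloads off the constrained edge machines, while the label keeps edge workloads from landing in the data center.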

Edge processing with low-resource devices:

As in the previous option, you have a central data center with a Kubernetes cluster and edge processing nodes at remote locations. In addition, low-resource devices, such as sensors, sit at the edge alongside those nodes. These devices are not Kubernetes nodes themselves; they exchange data with the Kubernetes edge nodes, which do the processing.

3. Security

Kubernetes also provides features that help you address security challenges. Here are some common concerns and the features that address them:

- Challenge: Containers can contain vulnerabilities that attackers can exploit. Solution: Image scanning

- Challenge: Inadequate access controls. Solution: Role-based access control (RBAC)

- Challenge: Cluster and network security. Solution: Network policies

- Challenge: Insider threats. Solution: Auditing and logging

- Challenge: Container breakouts. Solution: Security contexts

- Challenge: Securing sensitive data such as credentials. Solution: Secret management

Integrating container image scanning tools into the build pipeline or the cluster's admission control helps detect vulnerabilities and keep insecure images from being deployed.

RBAC allows fine-grained control over user permissions and restricts access to resources based on roles and privileges.
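For example, a namespaced Role grants a set of verbs on a set of resources, and a RoleBinding grants that Role to a user or group. The sketch below builds a read-only Role for pods and binds it to one user. The namespace `team-a` and the user `jane` are hypothetical.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def pod_reader_rbac(namespace: str, user: str) -> list[dict]:
    """Return a Role allowing read-only access to pods, plus a binding for one user."""
    role = {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" denotes the core API group, where pods live
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],  # no create/update/delete
        }],
    }
    binding = {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "read-pods", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": "pod-reader", "apiGroup": RBAC_GROUP},
    }
    return [role, binding]


if __name__ == "__main__":
    manifests = {"apiVersion": "v1", "kind": "List",
                 "items": pod_reader_rbac("team-a", "jane")}
    print(json.dumps(manifests, indent=2))
```

Because both objects are namespaced, `jane` can read pods in `team-a` only; granting the same access cluster-wide would require a ClusterRole and ClusterRoleBinding instead.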

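Network policies enable fine-grained control over network traffic within the cluster by specifying which pods may communicate with each other. They are enforced by the cluster's network plugin, so the plugin must support them.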
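As an illustration, the policy below selects pods labelled `app: api` and allows them to receive traffic only from pods labelled `app: frontend` in the same namespace. The labels and policy name are hypothetical; the sketch builds the manifest as a Python dictionary.

```python
import json


def allow_from_frontend(namespace: str = "default") -> dict:
    """NetworkPolicy: api pods accept ingress only from frontend pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "api-allow-frontend", "namespace": namespace},
        "spec": {
            # The policy applies to pods labelled app=api.
            "podSelector": {"matchLabels": {"app": "api"}},
            # Only ingress is restricted; egress from api pods is unaffected.
            "policyTypes": ["Ingress"],
            # Selected pods now reject all ingress except from app=frontend pods.
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(allow_from_frontend(), indent=2))
```

Policies are additive: once any policy selects a pod, traffic not allowed by some policy is dropped for that direction, so this single object also blocks every other source.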

Kubernetes supports auditing, enabling the collection of logs for monitoring and forensic analysis.

Kubernetes allows users to define security contexts for pods and containers. The goal is to limit their privileges and ensure that they adhere to security requirements, reducing the risk of container breakouts.
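A minimal sketch of a hardened pod: its container must run as a non-root user, cannot gain extra privileges, has a read-only root filesystem, drops all Linux capabilities and uses the runtime's default seccomp profile. The pod name, image and UID `1000` are hypothetical choices for illustration.

```python
import json


def hardened_pod(name: str, image: str) -> dict:
    """Build a Pod manifest with restrictive pod- and container-level security contexts."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Pod level: refuse to start containers that would run as root.
            "securityContext": {
                "runAsNonRoot": True,
                "runAsUser": 1000,
                "seccompProfile": {"type": "RuntimeDefault"},
            },
            "containers": [{
                "name": name,
                "image": image,
                # Container level: narrow what a compromised process can do.
                "securityContext": {
                    "allowPrivilegeEscalation": False,
                    "readOnlyRootFilesystem": True,
                    "capabilities": {"drop": ["ALL"]},
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(hardened_pod("web", "example.com/web:1.0"), indent=2))
```

Applications that write to local disk will need an `emptyDir` volume mounted at the paths they write to once the root filesystem is read-only.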

Kubernetes Secrets keep sensitive information, such as API keys or passwords, out of container images and pod specifications, and the API server can be configured to encrypt Secret data at rest.
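Values in a Secret's `data` field are base64-encoded, and base64 is an encoding rather than encryption: anyone who can read the Secret object can decode it, which is why RBAC on Secrets matters. The sketch below builds an `Opaque` Secret manifest in Python; the secret name `api-credentials` and the key `API_KEY` are hypothetical.

```python
import base64
import json


def opaque_secret(name: str, values: dict[str, str]) -> dict:
    """Build a Secret manifest, base64-encoding each value as the data field requires."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        # base64 only makes arbitrary bytes safe to embed; it provides no secrecy.
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


if __name__ == "__main__":
    secret = opaque_secret("api-credentials", {"API_KEY": "s3cr3t"})
    print(json.dumps(secret, indent=2))
    # Decoding requires no key at all:
    print(base64.b64decode(secret["data"]["API_KEY"]).decode("utf-8"))  # s3cr3t
```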

4. Operator Framework: Simplifying application management on Kubernetes

The Operator Framework is an open-source toolkit that extends Kubernetes to streamline the management and operation of complex applications on the platform. It provides tools, libraries and best practices for building Kubernetes operators.

It simplifies application management by adding a higher level of abstraction and automation on top of Kubernetes. The Operator Framework gives developers the tools and resources needed to build and run operators, including:

- Operator SDK: simplifies operator development by providing project scaffolding, code generation and testing capabilities.

- Operator Lifecycle Manager (OLM): handles operator installation, versioning and upgrades, simplifying operator management across Kubernetes environments.

- OperatorHub: a centralized marketplace for discovering and sharing operators, making it easier for users to find and install them.

- Operator Metering: provides usage and metering capabilities for operators, helping developers track resource consumption and costs.

- Community collaboration: sharing knowledge within the Kubernetes community fosters innovation and improves the quality of operators.
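At the core of most operators is a reconcile loop: the controller reads the desired state declared in a custom resource, compares it with what is actually running, and acts to close the gap. The toy sketch below models that comparison in plain Python with no cluster access. The `DatabaseCluster` resource, its fields and the action strings are hypothetical; they stand in for the Kubernetes API calls a real operator (for example, one scaffolded with the Operator SDK) would make.

```python
from dataclasses import dataclass


@dataclass
class DatabaseCluster:
    """Hypothetical custom resource: the desired state a user declares."""
    name: str
    replicas: int
    version: str


@dataclass
class ObservedState:
    """What the controller currently observes running in the cluster."""
    replicas: int
    version: str


def reconcile(desired: DatabaseCluster, observed: ObservedState) -> list[str]:
    """Return the actions that move the observed state toward the desired state.

    A real operator would issue API calls here; this sketch only describes them.
    If nothing differs, no actions are returned, so repeated runs are harmless.
    """
    actions = []
    if observed.version != desired.version:
        actions.append(f"upgrade {desired.name} from {observed.version} to {desired.version}")
    if observed.replicas < desired.replicas:
        actions.append(f"create {desired.replicas - observed.replicas} replica(s)")
    elif observed.replicas > desired.replicas:
        actions.append(f"delete {observed.replicas - desired.replicas} replica(s)")
    return actions


if __name__ == "__main__":
    want = DatabaseCluster(name="orders-db", replicas=3, version="15.4")
    have = ObservedState(replicas=1, version="15.3")
    for action in reconcile(want, have):
        print(action)
    # upgrade orders-db from 15.3 to 15.4
    # create 2 replica(s)
```

Because the function compares whole states rather than reacting to individual events, running it again after a restart or a missed event converges to the same result, which is the property that makes controllers robust.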

5. Machine learning

When it comes to AI and data science workloads, Kubernetes plays a crucial role in managing the infrastructure and resources needed to run machine learning workflows. Kubernetes can help streamline the deployment and scaling of ML models: it manages resource allocation, schedules workloads, and provides high availability and fault tolerance.

Kubernetes is increasingly adopted as a platform for running ML workloads due to its scalability, portability and resource management capabilities.

Kubernetes for AI and data science workloads offers several benefits. It enables efficient utilization of computing resources by dynamically scaling the infrastructure based on workload demands. It provides isolation and reproducibility for ML experiments through containerization, making it easier to package and share code, dependencies and data. Kubernetes also facilitates the deployment of complex ML pipelines, where multiple interconnected components and services need to be orchestrated.
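At the workload level, this dynamic scaling is commonly handled by the HorizontalPodAutoscaler (HPA). Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below implements only that rule plus the HPA's min/max bounds; it deliberately omits stabilization windows, pod readiness handling and multiple metrics, which the real controller also applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Apply the HPA core scaling rule for a single metric (simplified)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured minReplicas/maxReplicas bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # 4 model-serving pods at 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60))   # 6 (ratio 1.5)
    print(desired_replicas(4, 63, 60))   # 4 (within tolerance)
    print(desired_replicas(4, 20, 60))   # 2 (scale down)
    print(desired_replicas(8, 300, 60))  # 10 (capped by max_replicas)
```

Scaling the underlying machines when pods no longer fit is a separate concern, usually handled by a cluster autoscaler.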

Furthermore, Kubernetes integrates well with other tools and frameworks commonly used in the ML ecosystem, such as TensorFlow, PyTorch and Apache Spark. This makes it easier to add ML-specific capabilities such as distributed training, model serving and automated model deployment.

The Kubernetes community recognizes the growing importance of AI and data science workloads and is actively improving support for them. Some of the ways the Kubernetes community addresses AI and data science users are:

- Custom resource definitions (CRDs): CRDs let users define their own resource types, for example for AI and data science workloads, so that these workloads can be managed natively within Kubernetes.

- Operators and Operator Framework: The Operator Framework simplifies the packaging and management of AI applications by automating deployment, scaling and lifecycle management tasks.

- GPU support: Kubernetes supports GPUs through device plugins, enabling efficient allocation and utilization of GPUs for accelerated AI workloads.

- AI-specific libraries and tools: The Kubernetes ecosystem offers AI-specific tools like Kubeflow, providing end-to-end machine learning workflows seamlessly integrated with Kubernetes.

- Community collaboration and documentation: The Kubernetes community collaborates with AI practitioners. This enables the incorporation of feedback to improve features and provides comprehensive documentation and resources for effective AI workload management.
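As a concrete example of GPU scheduling, a container requests GPUs as an extended resource in its `limits`, and Kubernetes uses that limit as the request. The sketch below assumes the cluster runs the NVIDIA device plugin, which advertises the `nvidia.com/gpu` resource; the pod name and training image are hypothetical.

```python
import json


def gpu_training_pod(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest that asks the scheduler for whole GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # A training run should not be restarted automatically once it exits.
            "restartPolicy": "Never",
            "containers": [{
                "name": name,
                "image": image,
                # GPUs go in limits; the pod is only scheduled on a node with this many free.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(gpu_training_pod("train-model", "example.com/trainer:1.0"), indent=2))
```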

Transform application management at scale

As Kubernetes continues to evolve, understanding the key trends shaping its architecture is crucial for organizations investing in container orchestration. In one real-world project, we at Softweb Solutions used Kubernetes as part of the technology stack. By adopting a microservices architecture and integrating tools such as Kubernetes, Azure API Management and Docker, we created a scalable and efficient data ecosystem for our client.

A managed Kubernetes service offers you a convenient and efficient way to leverage Kubernetes for your applications. This reduces complexity, improves operational efficiency and accelerates time to market.

Businesses should adopt Kubernetes to handle growing workloads smoothly, to maintain uninterrupted service through automatic workload distribution, and to simplify the management of complex microservice architectures.