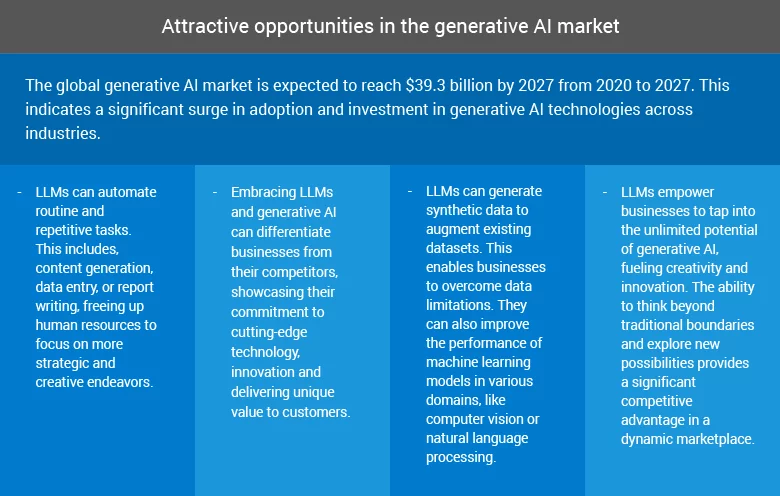

In the realm of artificial intelligence (AI), the evolution of generative models has sparked a wave of innovation and creativity. From transforming the way we generate text to revolutionizing content creation, generative artificial intelligence (generative AI) has been on a relentless journey of growth and adoption.

Generative AI has transformed the way machines understand and generate human-like content. Traditional AI algorithms typically rely on predefined rules and patterns, whereas generative AI models can produce original and creative outputs by learning from vast amounts of training data.

There are several types of generative AI models, such as generative adversarial networks (GANs) and large language models (LLMs). They use neural networks and complex training algorithms to learn the patterns, structures and relationships within their training data. Once trained, these models can generate new content whose characteristics and style resemble those of the data they were trained on.

What are LLMs?

Among the most powerful generative AI models are LLMs, such as OpenAI’s GPT-3, GPT-3.5 and GPT-4. These models have demonstrated remarkable abilities in understanding and generating contextually relevant and coherent text.

An LLM is trained on large amounts of text data and learns the statistical properties and patterns of language. It can then generate text by repeatedly predicting a likely next word (more precisely, the next token) based on what it has learned. This allows it to compose original sentences, paragraphs, or even entire articles.
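To make the idea of "learning statistical patterns, then generating text" concrete, here is a toy sketch in Python. It is a word-level bigram model, not an LLM: real LLMs use deep neural networks over subword tokens and far longer context. The principle of learning next-word statistics from text and then sampling from them is similar in spirit. The function names and the tiny corpus are illustrative assumptions.

```python
import random
from collections import defaultdict


def train_bigram_model(text: str) -> dict[str, dict[str, int]]:
    """Count how often each word is followed by each other word in the text."""
    counts: dict[str, dict[str, int]] = defaultdict(lambda: defaultdict(int))
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(model: dict[str, dict[str, int]], word: str) -> dict[str, float]:
    """Turn the follower counts for `word` into a probability distribution."""
    followers = model.get(word, {})
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def generate(model: dict[str, dict[str, int]], start: str, max_words: int, seed: int = 0) -> str:
    """Sample up to `max_words` words, each drawn in proportion to how often it followed the previous word."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    output = [start]
    for _ in range(max_words - 1):
        followers = model.get(output[-1])
        if not followers:  # dead end: this word was never followed by anything
            break
        candidates = list(followers)
        weights = [followers[w] for w in candidates]
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(output)


corpus = "the cat sat on the mat the cat ran"
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "the"))  # "cat" with 2/3, "mat" with 1/3
print(generate(model, "the", max_words=6))
```

An LLM replaces the count table with a neural network that scores every possible next token given the whole preceding context, but generation still proceeds one token at a time.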

58.9% of marketers use AI tools, such as ChatGPT, Anyword, Jasper, Copy.ai, Frase, and Quillbot, to optimize existing content. – Aira Survey

Integrating generative AI, specifically LLMs, into real-world applications requires careful consideration and adherence to best practices. In this blog post, we will explore the key principles of generative AI integration with a focus on LLMs, discuss common integration challenges and highlight successful case studies. We will also share insights into optimizing the use of LLMs for various applications.

Best practices for generative AI integration

- Define objectives clearly

- Model selection and configuration

- Fine-tuning for specific tasks

- Iterative approach

- Human-in-the-loop

- Bias detection and mitigation

Before integrating generative AI, it is crucial to define clear objectives and expected outcomes. Whether the goal is generating product descriptions, creating personalized content or improving customer interactions, setting specific goals guides the development process and keeps it aligned with the desired outcomes.

Select an appropriate generative AI model based on your objectives, data availability and computational resources. Then configure it for your specific use case by tuning its hyperparameters, adjusting its inference settings and choosing suitable training techniques to optimize its performance.

LLMs can be fine-tuned for specific tasks to improve their performance in targeted applications. By training the model further on task-specific datasets and optimizing hyperparameters, an LLM can adapt to generate text tailored to the requirements of applications such as sentiment analysis, summarization, or question answering.
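In practice, the first step of fine-tuning is usually preparing task-specific training examples. The sketch below assumes a chat-style JSON Lines format, where each line holds a "messages" list of system, user and assistant turns. This is similar to what some hosted fine-tuning services (for example, OpenAI's) accept, but check your provider's documentation for the exact schema. The system prompt, reviews and labels are hypothetical.

```python
import json

# Hypothetical instruction for a sentiment-analysis task.
SYSTEM_PROMPT = "Classify the sentiment of the customer review as positive, negative or neutral."

# Hypothetical labelled examples; replace with your own task-specific dataset.
LABELLED_REVIEWS = [
    ("The checkout process was quick and painless.", "positive"),
    ("My order arrived two weeks late.", "negative"),
    ("The package contained the items I ordered.", "neutral"),
]


def to_chat_example(review: str, label: str) -> dict:
    """Wrap one labelled review as a system/user/assistant conversation."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": review},
            {"role": "assistant", "content": label},  # the answer the model should learn to give
        ]
    }


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(ex, ensure_ascii=False) + "\n" for ex in examples)


training_data = to_jsonl([to_chat_example(review, label) for review, label in LABELLED_REVIEWS])
print(training_data.splitlines()[0])
```

You would then write `training_data` to a `.jsonl` file and upload it to your fine-tuning tool of choice. Keeping the system prompt identical across examples helps the model associate that instruction with the task.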

Adopt an iterative approach to model integration. Start with a small-scale implementation, gather feedback and iterate on the model’s performance and functionality. Gradually scale up the implementation while continuously improving the model.

Incorporate a human-in-the-loop approach to strengthen the generative AI system by combining AI-generated outputs with human expertise and judgment. Put mechanisms in place for human review, feedback and intervention to verify the accuracy and relevance of the generated content and to assess its ethical implications.
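One common way to put a human in the loop is to route each generated draft either to publication or to a review queue. The sketch below assumes each draft comes with a confidence score between 0 and 1 from a scoring step of your own, such as a classifier or heuristic checks; many LLM APIs do not return such a score directly. The class names, threshold and blocked-terms list are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Draft:
    text: str
    confidence: float  # hypothetical score in [0, 1] from your own evaluation step


@dataclass
class ReviewRouter:
    threshold: float = 0.8                   # drafts scoring below this need human review
    blocked_terms: tuple[str, ...] = ()      # lowercase terms that always trigger review
    review_queue: list[Draft] = field(default_factory=list)
    published: list[Draft] = field(default_factory=list)

    def route(self, draft: Draft) -> str:
        """Send a draft to human review or straight to publication."""
        text = draft.text.lower()
        if draft.confidence < self.threshold or any(term in text for term in self.blocked_terms):
            self.review_queue.append(draft)
            return "review"
        self.published.append(draft)
        return "publish"

    def approve(self, index: int, edited_text: Optional[str] = None) -> Draft:
        """A reviewer approves a queued draft, optionally correcting its text first."""
        draft = self.review_queue.pop(index)
        if edited_text is not None:
            draft.text = edited_text
        self.published.append(draft)
        return draft


router = ReviewRouter(threshold=0.8, blocked_terms=("guaranteed",))
print(router.route(Draft("Our new blender has five speed settings.", 0.93)))  # publish
print(router.route(Draft("Results are guaranteed within a week!", 0.97)))     # review
print(router.route(Draft("This phone may or may not be waterproof.", 0.41)))  # review
```

Reviewer edits captured through `approve` can also be logged and fed back as training or evaluation data, which ties this pattern to the iterative approach described above.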

Integrating generative AI, including LLMs, raises ethical considerations, such as the potential for biased or harmful outputs. It is important to implement ethical guidelines and frameworks to address these concerns. Regular audits, bias detection techniques and responsible content moderation are essential for ensuring fairness, transparency, and accountability in LLM-generated text.
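A simple bias-detection check compares how often outputs are flagged (for example, as negative or harmful) across prompts that differ only in the demographic group they mention. The sketch below computes a per-group flag rate and the largest gap between groups, in the spirit of a demographic parity comparison. How outputs get flagged (a classifier or human labels) and the 0.1 tolerance are assumptions. A real audit would also consider sample sizes and statistical significance.

```python
from collections import defaultdict


def flag_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Given (group, was_flagged) pairs, return the share of flagged outputs per group."""
    totals: dict[str, int] = defaultdict(int)
    flagged: dict[str, int] = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def audit(records: list[tuple[str, bool]], tolerance: float = 0.1) -> tuple[dict[str, float], float, bool]:
    """Return per-group rates, the largest gap between any two groups, and whether it exceeds the tolerance."""
    rates = flag_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap > tolerance


# Hypothetical audit data: each generated output is labelled with the group its prompt mentioned.
records = [
    ("group_a", False), ("group_a", False), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", True), ("group_b", False),
]
rates, gap, needs_attention = audit(records)
print(rates, gap, needs_attention)  # {'group_a': 0.25, 'group_b': 0.5} 0.25 True
```

Running a check like this on a fixed prompt set after every model or prompt change turns "regular audits" into a repeatable regression test.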

Challenges of generative AI integration and how to address them

Scalability and efficiency

LLMs are known for their computational demands and resource-intensive nature. Scaling up LLMs for large-scale applications and real-time requirements poses a significant challenge. Optimizing LLM architectures, leveraging distributed computing and utilizing specialized hardware accelerators can improve scalability and efficiency.

Model distillation can make LLM integration more practical and efficient. It involves training a smaller "student" model to approximate the behavior of a larger "teacher" model, which reduces the computation needed at inference time.
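The core of knowledge distillation, as described by Hinton et al. (2015), is a loss that pushes the student's temperature-softened output distribution toward the teacher's. The pure-Python sketch below computes that soft-target term (KL divergence scaled by the square of the temperature, a common formulation) and blends it with the usual hard-label cross-entropy. In practice this runs inside a deep learning framework with automatic differentiation. The logits, temperature and `alpha` values here are illustrative assumptions.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert logits to probabilities; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    teacher_logits: list[float],
    student_logits: list[float],
    true_label: int,
    temperature: float = 2.0,
    alpha: float = 0.5,
) -> float:
    """Blend the soft-target loss (match the teacher) with the hard-label loss (match the data)."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # Scaling by temperature**2 keeps soft-target gradients on a scale comparable to the hard loss.
    soft_loss = temperature ** 2 * kl_divergence(soft_teacher, soft_student)
    hard_loss = -math.log(softmax(student_logits)[true_label])  # ordinary cross-entropy
    return alpha * soft_loss + (1 - alpha) * hard_loss


teacher = [4.0, 1.5, 0.2]  # hypothetical logits from a large model
student = [2.5, 2.0, 0.5]  # hypothetical logits from a smaller model
print(distillation_loss(teacher, student, true_label=0))
```

The temperature is the key design choice: softening the teacher's distribution exposes how it ranks the wrong answers, which carries more information for the student than the single correct label.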

Interpretability and explainability

LLMs are often regarded as black boxes, which makes it difficult to understand how they generate specific outputs. Enhancing interpretability and explainability is crucial for building trust in LLM-generated text and understanding how it was produced.

Techniques such as attention-weight analysis, saliency analysis and model-agnostic interpretability methods can shed light on the decision-making process of LLMs. This makes their outputs more transparent and accountable.
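Attention-based explanations rely on the weights a transformer assigns to each input token. The sketch below computes scaled dot-product attention for a single query, the building block of the Transformer architecture, and reports which token receives the most weight. The two-dimensional vectors are made up for illustration. Real LLMs use many layers and attention heads with learned, high-dimensional vectors, and attention weights offer only a partial explanation of model behavior.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Normalize raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(
    query: list[float], keys: list[list[float]], values: list[list[float]]
) -> tuple[list[float], list[float]]:
    """Scaled dot-product attention for one query: returns (output vector, attention weights)."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the attention-weighted average of the value vectors.
    output = [sum(w * value[i] for w, value in zip(weights, values)) for i in range(len(values[0]))]
    return output, weights


tokens = ["the", "cat", "sat"]
keys = [[0.1, 0.2], [1.0, 0.3], [0.2, 0.9]]    # hypothetical key vectors, one per token
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical value vectors
query = [1.0, 0.0]                             # hypothetical query, e.g. from a later token

output, weights = attend(query, keys, values)
for token, weight in sorted(zip(tokens, weights), key=lambda pair: -pair[1]):
    print(f"{token}: {weight:.2f}")
```

Saliency methods take a complementary route: instead of reading internal weights, they measure how much the output changes when each input token is perturbed or removed.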

Real-life use cases of generative AI integration

ChatGPT

OpenAI’s ChatGPT is a prime example of successful LLM integration into a conversational AI application. It has been widely used on various platforms to provide human-like interactions and assistance. ChatGPT demonstrates the capability of LLMs to generate contextually relevant and engaging responses in real-time conversations. It enables improved customer support and virtual assistance.

The Washington Post

The Washington Post, a renowned media organization, has used automated text generation in its newsroom. Its in-house tool, Heliograf, generates short news updates from structured data. This has enabled The Washington Post to augment its news coverage, quickly produce real-time reports and expand its content output with machine-generated text.

The market for AI in content creation, including generative AI applications, is expected to grow from $606 million in 2020 to $2.8 billion by 2026. – Research and Markets

GitHub Copilot

GitHub Copilot, originally powered by OpenAI’s Codex (an LLM trained on code), assists developers by generating code snippets and offering intelligent suggestions as they write code. This integration boosts developer productivity and streamlines the software development process.

Google Translate

Google Translate uses large neural language models to generate more accurate and contextually appropriate translations. These models excel at capturing the nuances of language, which improves translation quality and aids cross-cultural communication.

Integrating generative AI: A roadmap to success

Generative AI integration has immense potential to transform many industries. By following the best practices outlined above, organizations can effectively integrate LLMs into their applications and workflows and take advantage of advanced language generation, content personalization and enhanced customer interactions.

As a leading provider of generative AI consulting services, Softweb Solutions is at the forefront of generative AI integration. Our expertise in developing and deploying cutting-edge AI solutions helps businesses unlock the full potential of generative AI.

Partner with Softweb Solutions to embark on a successful generative AI journey and stay ahead in today’s competitive landscape. Contact our AI experts to learn more about generative AI integration.