As the world becomes more data-driven, traditional data warehouses struggle to cope with the growing volume, variety, and velocity of data. Amid the rising use of big data, machine learning, and real-time analytics, many organizations are scaling their data infrastructure on a modern platform: Azure Databricks.

Built on the Apache Spark engine, Azure Databricks has become a popular choice for organizations looking to transform their traditional data systems into flexible, scalable, and efficient data platforms.

Let’s get an overview of the core technologies behind a modern data platform:

- ADLS Gen2 (Azure Data Lake Storage Gen2): A scalable and secure cloud storage service optimized for big data analytics.

- Apache Spark: An open-source distributed processing engine for large-scale data processing and analytics.

- Delta Lake: A storage layer that brings ACID transactions, scalable metadata, and data versioning to big data lakes.

- Data Lakehouse Architecture: A unified data architecture combining the best features of data lakes and data warehouses, enabling both analytics and machine learning.
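To make Delta Lake's versioning idea concrete, here is a toy, pure-Python model of a table transaction log. Each commit records which data files were added and removed. The table at any version is the replay of all commits up to that version. This is a conceptual sketch, not Delta Lake's API, and the class and method names are hypothetical. Real Delta tables keep this log as JSON files in a `_delta_log` directory next to the Parquet data files.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTransactionLog:
    """Hypothetical, simplified model of a table transaction log (not Delta Lake's API)."""

    commits: list = field(default_factory=list)  # one entry per committed version

    def commit(self, add=(), remove=()):
        """Record file additions/removals as one new version, all or nothing."""
        # Validate against the latest snapshot first, so a bad commit
        # leaves the log untouched.
        missing = set(remove) - self.snapshot()
        if missing:
            raise ValueError(f"cannot remove files not in table: {sorted(missing)}")
        self.commits.append({"add": list(add), "remove": list(remove)})
        return len(self.commits) - 1  # version number of this commit

    def snapshot(self, version=None):
        """Return the set of live data files as of `version` (latest if None)."""
        if version is None:
            version = len(self.commits) - 1
        files = set()
        for entry in self.commits[: version + 1]:
            files |= set(entry["add"])
            files -= set(entry["remove"])
        return files


log = ToyTransactionLog()
log.commit(add=["part-000.parquet"])
v1 = log.commit(add=["part-001.parquet"])
# Rewrite part-000 as part-002 (e.g. after an update); version v1 stays readable.
v2 = log.commit(add=["part-002.parquet"], remove=["part-000.parquet"])
print(sorted(log.snapshot(v1)))  # ['part-000.parquet', 'part-001.parquet']
print(sorted(log.snapshot(v2)))  # ['part-001.parquet', 'part-002.parquet']
```

Because old versions are just shorter prefixes of the log, reading "as of" an earlier version only requires replaying fewer commits.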

Looking to modernize your data warehouse?

By leveraging Azure Databricks, our certified Microsoft consultants help you streamline data workflows. We optimize big data processing and implement scalable analytics solutions tailored to your data needs.

Why do businesses need to modernize their data warehouses?

- Scalability issues: Conventional data warehouses often struggle to scale with rapidly growing datasets.

- Slow processing: High-latency batch processing leads to delays in reporting and insights.

- Data silos: Different systems store structured, semi-structured, and unstructured data separately, making the data harder to access and integrate.

- Limited analytics: Traditional systems are not optimized for advanced analytics and machine learning workloads.

Organizations can address these challenges by modernizing their data warehouse infrastructure with Azure Databricks, which also helps future-proof their data architecture.

What is Azure Databricks?

Azure Databricks is a collaborative Apache Spark-based analytics platform optimized for Azure. It simplifies large-scale data engineering, data science, and machine learning. Moreover, it provides a unified platform that integrates seamlessly with Azure services.

Streamlined data integration with Databricks

Softweb Solutions enhanced a manufacturing client’s data integration by implementing Databricks, resulting in improved data processing efficiency, scalability, and actionable insights.

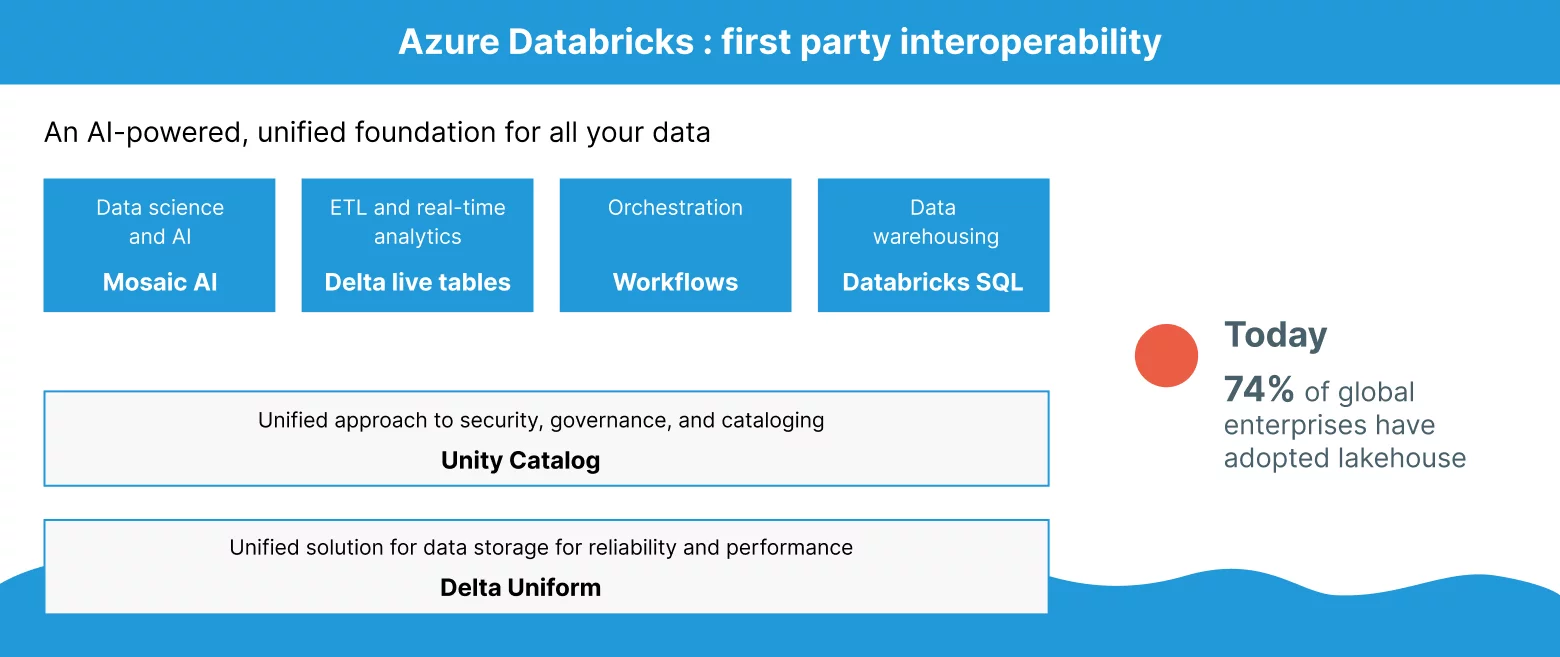

Key features of Azure Databricks:

- Unified analytics: Combines big data processing with machine learning in a single environment.

- Delta Lake: Brings ACID transactions and scalable metadata handling to big data architectures. It stores tables in the open Delta format: Parquet data files plus a transaction log that records every change.

- Integration with Azure: Works seamlessly with other Azure services such as Azure Data Lake Storage, Azure Synapse Analytics, and Power BI.

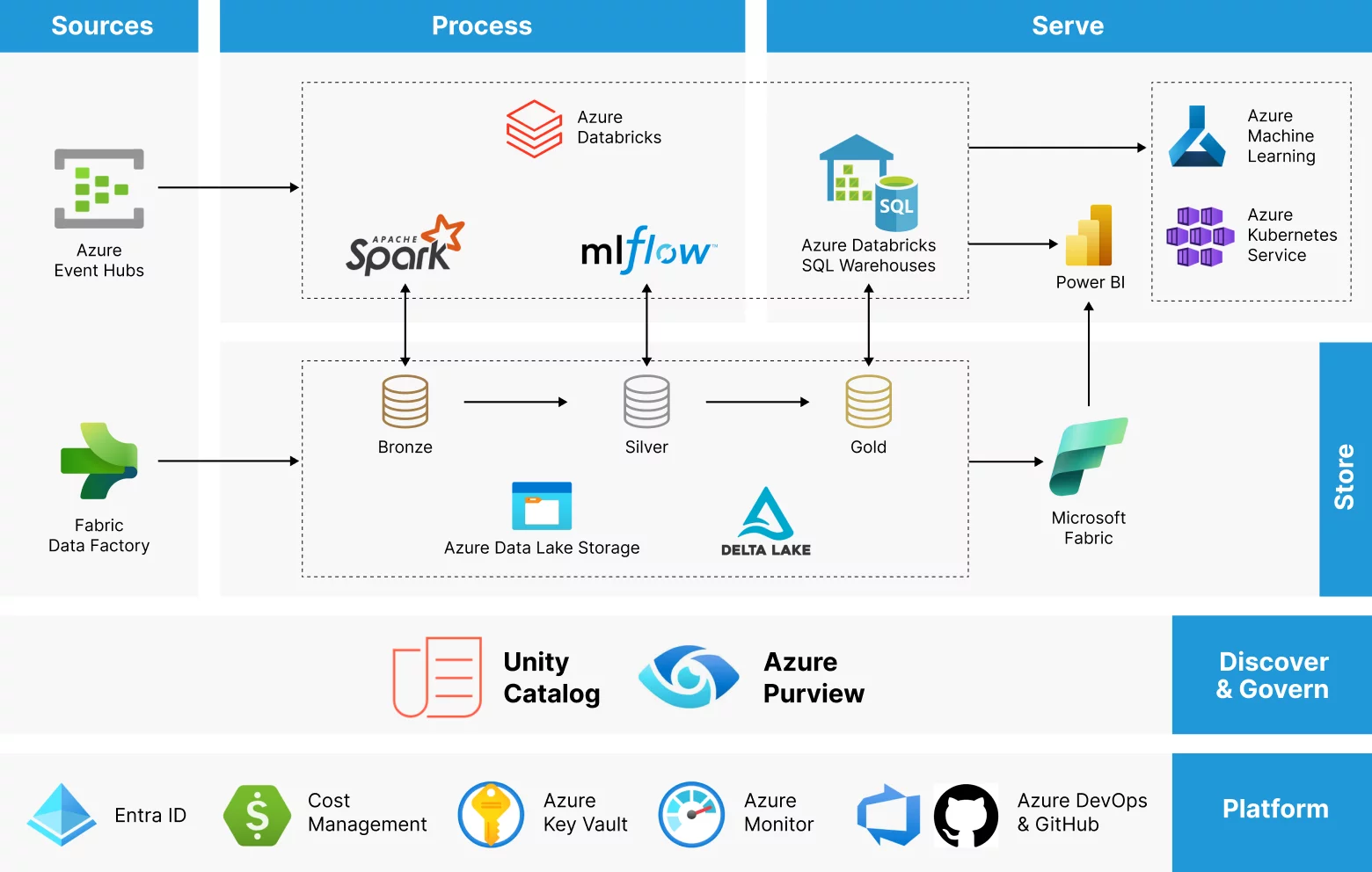

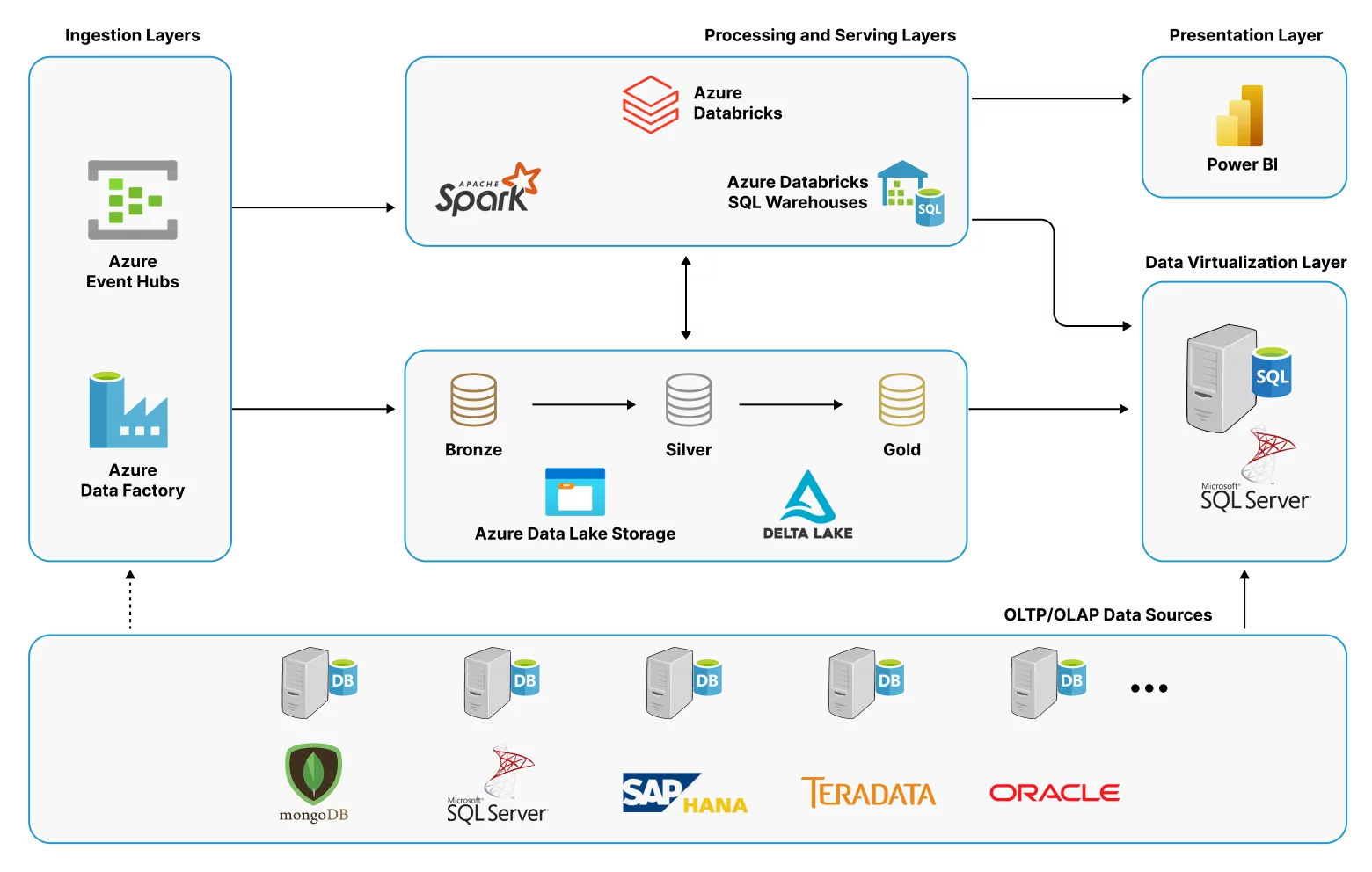

Architecture

Use case: Modernizing a retail data warehouse

For instance, consider a global retail organization that relies on a traditional SQL-based data warehouse for BI and reporting. Batch ETL jobs run overnight to load daily sales, inventory, and customer data. As the company expanded globally and its data volumes grew massively, the following issues arose:

- Slow data processing: The batch ETL jobs took longer and longer to complete, so reports were always based on the previous day’s data.

- Poor scalability: As data volumes grew, their data warehouse struggled to handle larger datasets, impacting query performance.

- Lack of real-time insights: Without real-time visibility into sales and inventory, the company struggled to optimize its supply chain and make quick business decisions.

- Siloed data: They stored customer behavior data, web analytics, and social media data in separate systems, making it difficult to perform comprehensive analysis.

To overcome these challenges, the company decided to modernize its data warehouse with Azure Databricks and implement a data lakehouse architecture.

Here’s how they can do it:

1. Unified Data Lake for all data types

- The company should set up Azure Data Lake Storage (ADLS) as a centralized repository for all types of data (structured, semi-structured, and unstructured). Batch and real-time data from various sources should be fed into the data lake using Azure Data Factory.
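As an illustration of a centralized lake layout, the helper below builds ADLS Gen2 URIs. It uses the `abfss://<container>@<account>.dfs.core.windows.net/<path>` form that Spark uses to address the lake. The storage account name, the container, the format-to-category mapping, and the `raw/<category>/<source>/<format>/<yyyy/mm/dd>` folder convention are all hypothetical choices made for this sketch. The article does not prescribe them.

```python
from datetime import date

# Hypothetical names for the retailer's lake; replace with your own.
STORAGE_ACCOUNT = "retaillake"  # assumption: ADLS Gen2 storage account name
CONTAINER = "datalake"          # assumption: container (file system) name

# Rough, illustrative grouping of source formats by data type.
DATA_TYPES = {
    "csv": "structured",
    "parquet": "structured",
    "json": "semi-structured",
    "avro": "semi-structured",
    "jpg": "unstructured",
    "txt": "unstructured",
}


def landing_path(source: str, file_format: str, load_date: date) -> str:
    """Build an abfss:// URI for a raw-zone landing folder (hypothetical layout)."""
    fmt = file_format.lower()
    if fmt not in DATA_TYPES:
        raise ValueError(f"unsupported format: {file_format}")
    category = DATA_TYPES[fmt]
    return (
        f"abfss://{CONTAINER}@{STORAGE_ACCOUNT}.dfs.core.windows.net/"
        f"raw/{category}/{source}/{fmt}/{load_date:%Y/%m/%d}"
    )


print(landing_path("pos_sales", "csv", date(2024, 3, 1)))
# abfss://datalake@retaillake.dfs.core.windows.net/raw/structured/pos_sales/csv/2024/03/01
```

A predictable, date-partitioned folder convention like this lets ingestion pipelines and Spark jobs locate every data type in one place, instead of querying separate systems.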

2. Delta Lake for scalable, reliable data storage

- They should implement Delta Lake on top of ADLS to get ACID transactions and efficient read/write operations. This lets the team build a single source of truth, merging real-time streaming data with historical data while keeping the data consistent.
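Merging new records into historical data is usually done as an upsert: update rows whose key already exists and insert the rest. Delta Lake exposes this as `MERGE INTO`. The pure-Python sketch below models only those semantics on in-memory records, so the logic is easy to follow. The `(store, sku)` key and the field names are hypothetical. On Databricks the same step would be a MERGE against a Delta table.

```python
def upsert(target: dict, updates: list, key_fields=("store", "sku")) -> dict:
    """Model MERGE semantics: update matching keys, insert new ones.

    `target` maps a composite key tuple to a record dict (the "historical" table);
    `updates` is a batch of incoming records (e.g. from a stream).
    Returns a new dict so the original snapshot stays unchanged.
    """
    merged = dict(target)
    for record in updates:
        key = tuple(record[f] for f in key_fields)
        if key in merged:
            # WHEN MATCHED THEN UPDATE: newer values overwrite older ones
            merged[key] = {**merged[key], **record}
        else:
            # WHEN NOT MATCHED THEN INSERT
            merged[key] = dict(record)
    return merged


history = {
    ("S1", "A100"): {"store": "S1", "sku": "A100", "on_hand": 40},
    ("S1", "B200"): {"store": "S1", "sku": "B200", "on_hand": 15},
}
incoming = [
    {"store": "S1", "sku": "A100", "on_hand": 37},  # existing key -> updated
    {"store": "S2", "sku": "A100", "on_hand": 22},  # new key -> inserted
]
current = upsert(history, incoming)
print(current[("S1", "A100")]["on_hand"], len(current))  # 37 3
```

One simplification: if a batch contains the same key twice, the later record simply wins here. Delta's MERGE expects at most one source row per target row, so deduplicate each batch first.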

3. Real-time data processing with Structured Streaming

- Instead of waiting for batch jobs to complete, the company can use Spark Structured Streaming in Databricks to process sales and inventory data as it arrives. This lets it monitor stock levels, predict demand, and optimize inventory across stores in near real time.
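Structured Streaming processes a stream as a series of small micro-batches and keeps running state between them for aggregations. The stdlib-only sketch below imitates that pattern for stock levels. Each micro-batch of sale and restock events updates per-store, per-SKU state. Only rows touched by that batch and now below a reorder threshold are reported, similar in spirit to the "update" output mode. The event schema and the threshold of 10 units are assumptions, and this is not the Spark API.

```python
from collections import defaultdict

REORDER_THRESHOLD = 10  # assumption: flag items with fewer than 10 units on hand


def process_micro_batch(state: defaultdict, events: list) -> list:
    """Apply one micro-batch of inventory events to running state.

    Each event looks like {"store": "S1", "sku": "A100", "qty": -3}, where a
    negative qty is a sale and a positive qty is a restock (hypothetical schema).
    Returns the (store, sku) keys updated in this batch that are now below threshold.
    """
    touched = set()
    for event in events:
        key = (event["store"], event["sku"])
        state[key] += event["qty"]
        touched.add(key)
    # Like "update" output mode: only rows changed by this batch are emitted.
    return sorted(k for k in touched if state[k] < REORDER_THRESHOLD)


state = defaultdict(int)
batches = [
    [{"store": "S1", "sku": "A100", "qty": 50}, {"store": "S1", "sku": "B200", "qty": 12}],
    [{"store": "S1", "sku": "A100", "qty": -5}, {"store": "S1", "sku": "B200", "qty": -4}],
    [{"store": "S1", "sku": "A100", "qty": -41}],
]
for i, batch in enumerate(batches):
    print(i, process_micro_batch(state, batch))
# 0 []
# 1 [('S1', 'B200')]
# 2 [('S1', 'A100')]
```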

4. Advanced analytics and machine learning

- Azure Databricks provides the platform to run machine learning models at scale. The company can leverage these models to analyze customer behavior, forecast demand, and recommend personalized promotions.
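As a deliberately minimal stand-in for a demand-forecasting model, the sketch below applies simple exponential smoothing to daily unit sales. It is not the model Databricks provides or the retailer necessarily uses. It only shows the core idea of learning a next-period forecast from historical sales. The smoothing factor `alpha=0.5` and the sample numbers are arbitrary assumptions.

```python
def exponential_smoothing_forecast(sales: list, alpha: float = 0.5) -> float:
    """One-step-ahead forecast via simple exponential smoothing.

    level_0 = sales[0]; level_t = alpha * sales[t] + (1 - alpha) * level_{t-1}.
    The final level is the forecast for the next period.
    """
    if not sales:
        raise ValueError("need at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = float(sales[0])
    for y in sales[1:]:
        level = alpha * y + (1 - alpha) * level
    return level


daily_units = [120, 130, 110, 150, 140]  # hypothetical daily sales for one SKU
print(exponential_smoothing_forecast(daily_units))  # 136.875
```

A larger `alpha` reacts faster to recent sales; a smaller one smooths out noise. In practice the same forecast would be computed per store and SKU across the whole dataset.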

5. Power BI for business intelligence

- For reporting, the team can connect Power BI to Databricks SQL warehouses (formerly called SQL endpoints) to create interactive dashboards that provide instant insights into sales trends, product performance, and inventory levels. Business users can query the most up-to-date data using SQL, without relying on outdated reports from the previous day.
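The query below shows the kind of SQL a dashboard or business user might send for product performance on a given day. To keep the example runnable anywhere, it uses Python's built-in `sqlite3` as a stand-in engine rather than a Databricks SQL warehouse. The `sales` table, its columns, and the sample rows are hypothetical. The `SUM ... GROUP BY ... ORDER BY` aggregation pattern is standard SQL and carries over.

```python
import sqlite3

# sqlite3 stands in for a Databricks SQL warehouse so the example runs locally.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (sale_date TEXT, store TEXT, product TEXT, units INTEGER, revenue REAL)"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?, ?)",
    [
        ("2024-03-01", "S1", "A100", 5, 50.0),
        ("2024-03-01", "S2", "A100", 3, 30.0),
        ("2024-03-01", "S1", "B200", 2, 40.0),
        ("2024-02-29", "S1", "A100", 9, 90.0),  # previous day, excluded by the filter
    ],
)

# Product performance for one day: the kind of query a dashboard tile might issue.
query = """
    SELECT product, SUM(units) AS total_units, SUM(revenue) AS total_revenue
    FROM sales
    WHERE sale_date = ?
    GROUP BY product
    ORDER BY total_revenue DESC
"""
for row in conn.execute(query, ("2024-03-01",)):
    print(row)
# ('A100', 8, 80.0)
# ('B200', 2, 40.0)
```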

By modernizing its data warehouse with Azure Databricks, the retail company can achieve improvements in the following areas:

- Faster data processing

- Resource scalability

- Enhanced analytics

- Cost efficiency

- Unified data access

Why choose Azure Databricks for your data warehouse modernization?

By using Databricks’ real-time processing, scalable infrastructure, and seamless integration with the Azure ecosystem, organizations can get actionable insights faster, reduce costs, and stay ahead of the competition.

- It handles large data volumes efficiently because it runs on Apache Spark’s distributed compute engine.

- It consolidates all kinds of data in a single platform.

- It delivers real-time insights through machine learning and Structured Streaming.

- Organizations can integrate Azure services like Data Lake Storage, Synapse Analytics, and Power BI to extend Databricks’ capabilities.

- Because clusters can be configured to autoscale, organizations can optimize compute resources and reduce operational costs.
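Autoscaling is configured per cluster as a minimum and maximum number of workers. The sketch below builds a request body for the Databricks Clusters API (`POST /api/2.0/clusters/create`) with an `autoscale` range and an idle auto-termination timeout. The cluster name, runtime version, VM size, and limits are placeholder assumptions. The snippet only prints the JSON and does not call the API.

```python
import json


def cluster_spec(name: str, min_workers: int, max_workers: int) -> dict:
    """Build an autoscaling cluster definition for the Clusters API (placeholder values)."""
    # Basic sanity check only; Databricks applies its own validation.
    if not 0 <= min_workers <= max_workers:
        raise ValueError("require 0 <= min_workers <= max_workers")
    return {
        "cluster_name": name,
        "spark_version": "13.3.x-scala2.12",  # assumption: use a runtime available in your workspace
        "node_type_id": "Standard_DS3_v2",    # assumption: Azure VM size
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        "autotermination_minutes": 30,        # shut down idle clusters to save cost
    }


print(json.dumps(cluster_spec("retail-etl", 2, 8), indent=2))
```

With a range like 2 to 8 workers, the cluster can add capacity during heavy ETL windows and shrink when load drops, so it is not sized for peak load all day.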

Conclusion

Modernizing a data warehouse with Azure Databricks can transform the way organizations handle, process, and analyze their data. The retail use case shows the advantages of implementing a unified platform that combines the best of both worlds: big data processing with advanced analytics and machine learning.

Softweb Solutions uses Azure Databricks to modernize data warehouses, enabling efficient integration, scalable big data processing, and real-time analytics. With expertise in implementing advanced data pipelines and machine learning models, our experts help businesses unlock deeper insights, improve decision-making, and reduce operational complexity. Talk to our expert data consultants to learn more.