For many organizations, managing big data workloads can be complex, time-consuming, and resource-intensive. Azure Data Lake Analytics (ADLA) was introduced to address these challenges, offering a serverless analytics service that let users run parallel data processing jobs without managing infrastructure. It became a popular choice for teams looking to extract insights from structured and unstructured data quickly and cost-effectively.

However, as cloud technology continues to evolve, so do the tools. Microsoft has officially retired Azure Data Lake Analytics as of February 29, 2024. If you’ve been relying on ADLA, now is the time to evaluate your next steps.

To make it easy for companies like yours, Microsoft has provided clear guidance on how to move forward with more modern, integrated solutions.

Recommended alternatives for Azure Data Lake Analytics

For organizations seeking to process and analyze large volumes of data in Azure, Microsoft recommends transitioning to the following services:

- Azure Synapse Analytics: An integrated analytics service that combines big data and data warehousing. It supports T-SQL, Spark, and .NET, enabling comprehensive data exploration and analysis across data lakes and warehouses.

- Azure Databricks: A collaborative Apache Spark-based analytics platform optimized for Azure. It facilitates data engineering, machine learning, and data analytics.

- Azure HDInsight: A fully managed cloud service that makes it easier to process massive amounts of data using open-source frameworks such as Hadoop, Spark, and Hive.

- Azure Data Factory: A cloud-based data integration service that allows you to create, schedule, and orchestrate data workflows at scale. It supports hybrid data movement and transformation across a wide range of sources.

Recommended migration path from Azure Data Lake Analytics

Microsoft recommends transitioning to Azure Synapse Analytics, a comprehensive platform that unifies big data and data warehousing capabilities. Unlike ADLA, Synapse provides a single environment for querying data with T-SQL or Apache Spark, running machine learning models, and visualizing results with Power BI.

Here’s how you can begin the migration:

1. Assess your existing ADLA workloads

Review your current jobs, scripts, and data pipelines to understand which components need to be migrated or restructured.
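One way to start the assessment is to inventory your U-SQL scripts and flag constructs that tend to need manual rework, such as custom C# assemblies and user-defined operators. The Python sketch below assumes your scripts have been exported locally as `.usql` files; the pattern list and the `profile_script` and `inventory` helpers are hypothetical and deliberately simple (plain keyword matching, not a real U-SQL parser).

```python
import re
from collections import Counter
from pathlib import Path

# Hypothetical checklist of U-SQL constructs that usually need manual
# attention when porting to Synapse (T-SQL or Spark). Keyword matching only.
PATTERNS = {
    "extract": re.compile(r"\bEXTRACT\b"),
    "output": re.compile(r"\bOUTPUT\b"),
    "reference_assembly": re.compile(r"\bREFERENCE\s+ASSEMBLY\b"),
    "custom_operator": re.compile(r"\bUSING\s+new\b"),  # user-defined C# extractors etc.
}


def profile_script(text: str) -> Counter:
    """Count how often each tracked construct appears in one U-SQL script."""
    return Counter({name: len(p.findall(text)) for name, p in PATTERNS.items()})


def inventory(root: str) -> dict[str, Counter]:
    """Profile every *.usql file under `root` (a local export of your scripts)."""
    return {
        str(path): profile_script(path.read_text(encoding="utf-8"))
        for path in sorted(Path(root).rglob("*.usql"))
    }
```

Scripts with non-zero `reference_assembly` or `custom_operator` counts are the ones most likely to need restructuring rather than a line-by-line rewrite.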

2. Move data to Azure Data Lake Storage Gen2

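Azure Synapse is designed to work seamlessly with Azure Data Lake Storage Gen2. If your data is already stored there, you’re ahead. If it still lives in Azure Data Lake Storage Gen1, the store ADLA jobs typically read from, plan to move it, since Gen1 was retired on the same date as ADLA. Our experts can help you migrate your data to Azure Data Lake Storage Gen2 for optimal performance.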
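When data moves from ADLS Gen1 to Gen2, the paths referenced by jobs and notebooks change too: Gen1 paths use the `adl://<account>.azuredatalakestore.net/...` form, while Gen2 is usually addressed through the ABFS driver as `abfss://<container>@<account>.dfs.core.windows.net/...`. Here is a minimal sketch of a path-rewriting helper, assuming files keep the same folder layout inside a single target container. The function name and the account and container values are illustrative, and the data copy itself is not shown.

```python
from urllib.parse import urlparse

GEN1_HOST_SUFFIX = ".azuredatalakestore.net"


def gen1_to_gen2(uri: str, gen2_account: str, container: str) -> str:
    """Rewrite an ADLS Gen1 adl:// URI as an ADLS Gen2 abfss:// URI.

    Assumes the data was copied into `container` in `gen2_account`
    with the same folder layout (illustrative mapping, adjust to your plan).
    """
    parsed = urlparse(uri)
    if parsed.scheme != "adl" or not parsed.netloc.endswith(GEN1_HOST_SUFFIX):
        raise ValueError(f"not an ADLS Gen1 URI: {uri}")
    path = parsed.path.lstrip("/")
    return f"abfss://{container}@{gen2_account}.dfs.core.windows.net/{path}"


# Example with hypothetical account names:
# gen1_to_gen2("adl://contosoadls.azuredatalakestore.net/raw/logs/2023.csv",
#              "contosogen2", "raw")
# returns "abfss://raw@contosogen2.dfs.core.windows.net/raw/logs/2023.csv"
```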

3. Rewrite U-SQL scripts

Since U-SQL is not supported in Synapse, you’ll need to rewrite your analytics logic using T-SQL, Apache Spark, or Data Flows, depending on the workload.
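To make the rewrite concrete, here is a typical U-SQL pattern (EXTRACT, then SELECT ... GROUP BY, then OUTPUT) with the same logic expressed in plain Python so it runs anywhere. This is a sketch of the translation, not a Synapse-specific API: the column names and paths are hypothetical, and in a Synapse Spark pool you would express the same three steps with DataFrame operations.

```python
import csv
import io
from collections import defaultdict

# The U-SQL being ported (hypothetical columns and paths):
#   @log = EXTRACT UserId int, Region string, Duration int
#          FROM "/input/SearchLog.tsv" USING Extractors.Tsv();
#   @res = SELECT Region, SUM(Duration) AS TotalDuration
#          FROM @log GROUP BY Region;
#   OUTPUT @res TO "/output/result.csv" USING Outputters.Csv();


def extract(tsv_text: str) -> list[dict]:
    """EXTRACT step: parse tab-separated rows into typed records."""
    reader = csv.reader(io.StringIO(tsv_text), delimiter="\t")
    return [
        {"UserId": int(u), "Region": r, "Duration": int(d)}
        for u, r, d in reader
    ]


def total_duration_by_region(rows: list[dict]) -> dict[str, int]:
    """SELECT ... GROUP BY step: sum Duration per Region."""
    totals: dict[str, int] = defaultdict(int)
    for row in rows:
        totals[row["Region"]] += row["Duration"]
    return dict(totals)


def output_csv(totals: dict[str, int]) -> str:
    """OUTPUT step: write Region,TotalDuration rows, sorted for stable diffs."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for region in sorted(totals):
        writer.writerow([region, totals[region]])
    return buf.getvalue()
```

Keeping one function per U-SQL statement makes the port easy to review against the original script. In Spark, the three steps map onto a DataFrame read, a `groupBy(...).agg(...)`, and a write.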

4. Utilize Synapse Pipelines

Use Synapse Pipelines to rebuild any ETL or orchestration logic that previously ran in ADLA. These pipelines support both code-free and code-based transformations and integrate smoothly with a wide range of data sources.
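ADLA jobs were often chained, with one job reading another job’s output. Before recreating such chains as pipeline activities, it helps to derive a valid run order. The sketch below applies Python’s standard `graphlib` module to a hypothetical job map; each resulting stage is a group of jobs with no dependencies on one another, so they could run as parallel activities, and each stage depends only on earlier ones.

```python
from graphlib import TopologicalSorter

# Hypothetical ADLA job chain: each job maps to the jobs whose output it reads.
# In Synapse Pipelines these would become activity dependencies.
JOB_DEPENDENCIES = {
    "cleanse_logs": {"ingest_logs"},
    "aggregate_daily": {"cleanse_logs"},
    "load_warehouse": {"aggregate_daily", "load_reference"},
}


def activity_stages(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group jobs into stages; jobs within a stage do not depend on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the chain has a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages
```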

5. Validate and optimize

Test your new workloads in Synapse, optimize for performance, and ensure that results match your original analytics output.
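A simple way to check that results match is to compare the ADLA output with the Synapse output as unordered collections of rows, since distributed engines rarely guarantee row order. This sketch assumes both outputs are headerless CSV text and treats numbers as equal once rounded to `ndigits` decimal places. That is a coarse tolerance which can still flag values straddling a rounding boundary, and the function names are hypothetical.

```python
import csv
import io
from collections import Counter


def _normalize(csv_text: str, ndigits: int) -> Counter:
    """Parse CSV rows into a multiset, rounding numeric cells to `ndigits`."""
    rows = Counter()
    for row in csv.reader(io.StringIO(csv_text)):
        cells = []
        for cell in row:
            try:
                # "+ 0.0" folds -0.0 into 0.0 so signed zeros compare equal.
                cells.append(str(round(float(cell), ndigits) + 0.0))
            except ValueError:
                cells.append(cell.strip())
        rows[tuple(cells)] += 1
    return rows


def compare_outputs(expected_csv: str, actual_csv: str, ndigits: int = 6) -> dict:
    """Order-insensitive diff of two headerless CSV outputs (e.g. ADLA vs Synapse)."""
    expected = _normalize(expected_csv, ndigits)
    actual = _normalize(actual_csv, ndigits)
    return {
        "missing": expected - actual,     # rows only in the original ADLA output
        "unexpected": actual - expected,  # rows only in the new Synapse output
    }
```

Empty `missing` and `unexpected` results mean the two outputs agree up to row order and the chosen rounding.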

A deep dive into Azure Data Lake Analytics

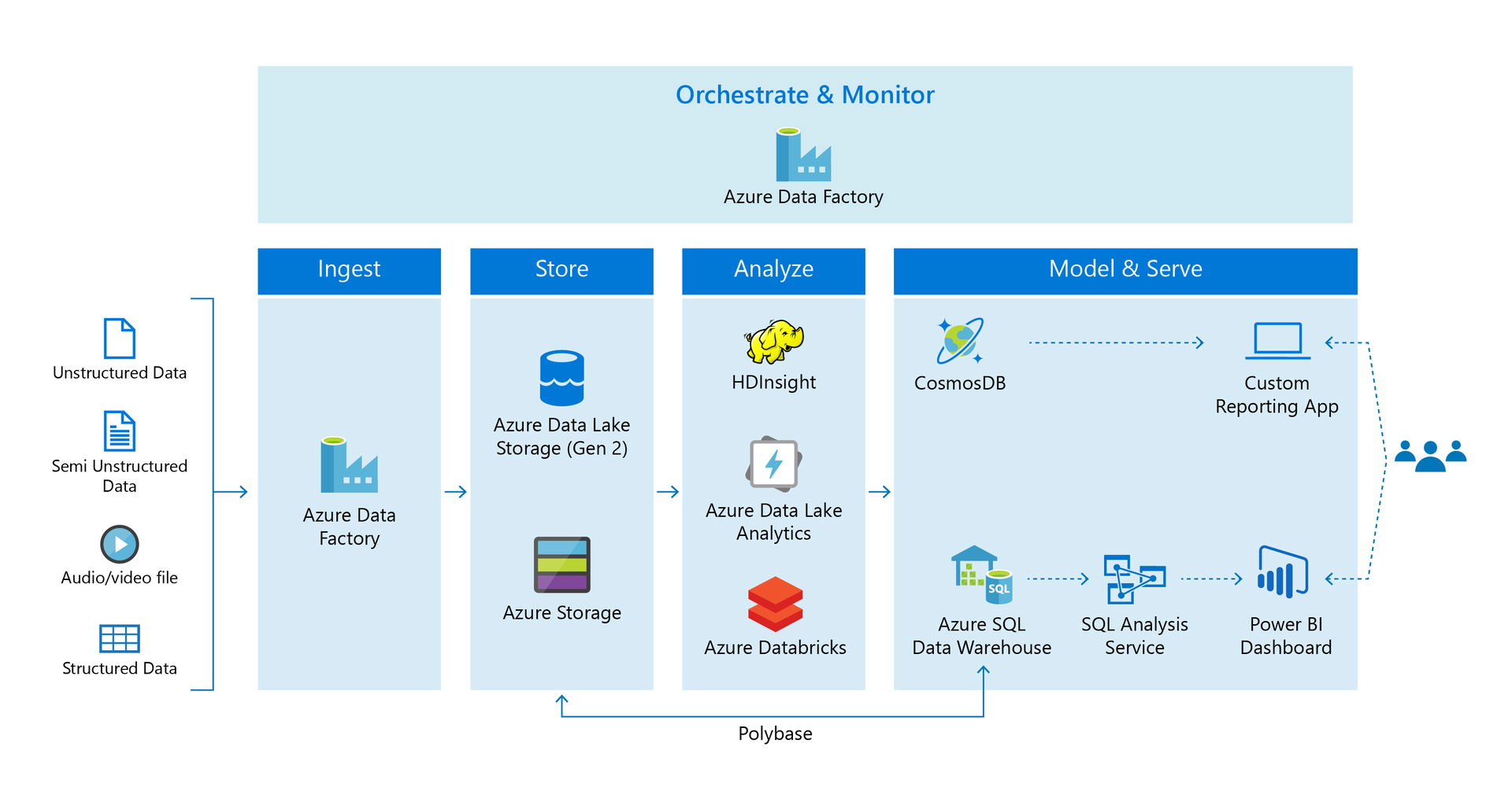

Azure Data Lake consists primarily of three components:

1. Azure Data Lake Analytics

2. Azure Data Lake Store

3. HDInsight

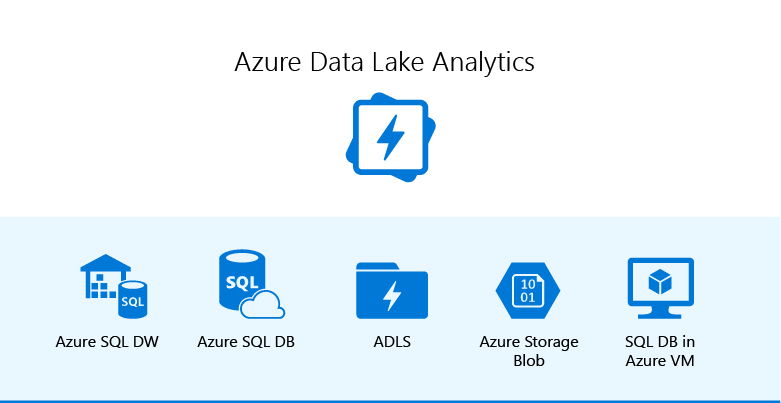

Azure Data Lake Analytics (ADLA) is an on-demand, HDFS-compatible analytics job service offered by Microsoft in the Azure cloud to simplify big data analytics, an approach also known as ‘Big Data-as-a-Service’. Built on Apache YARN, Hadoop’s resource-management framework, it is a distributed analytics service that lets users process unstructured, semi-structured, and structured data without provisioning or managing clusters.

The service lets you scale processing power up or down for each job and run massively parallel data processing on demand. Alongside U-SQL, a big data query language that combines SQL with C#, the platform supports extensions in R, Python, and .NET. The key unit of compute is the Azure Data Lake Analytics Unit (AU): the number of AUs assigned to a job sets its maximum degree of parallelism.
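A rough way to see how AUs bound parallelism: ADLA splits a job into stages made of many small units of work called vertices, and each AU runs one vertex at a time. The sketch below uses that simplified model, assuming every vertex takes the same time, to estimate how many rounds (waves) a stage needs. Real vertex durations vary, so treat the numbers as illustrative; the function names are hypothetical.

```python
def vertex_waves(vertices: int, aus: int) -> int:
    """Rounds of execution if each AU runs one vertex at a time (simplified model)."""
    if vertices < 0 or aus < 1:
        raise ValueError("need vertices >= 0 and aus >= 1")
    return (vertices + aus - 1) // aus  # integer ceiling division


def estimated_stage_minutes(vertices: int, aus: int, minutes_per_vertex: float) -> float:
    """Idealized stage duration, assuming every vertex takes the same time."""
    return vertex_waves(vertices, aus) * minutes_per_vertex
```

Under this model, a 1,000-vertex stage needs four waves with either 250 or 300 AUs, so the extra 50 AUs would add cost without shortening the stage, and any AUs beyond the vertex count would sit idle.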

As a result, Azure Data Lake Analytics lets you set aside worries about infrastructure and resources and focus solely on writing queries and running jobs. Hence, it is also known as ‘Job-as-a-Service’ or ‘Query-as-a-Service’.

What is Azure Data Lake Analytics used for?

Azure Data Lake Analytics is mainly used for distributed data processing across diverse workloads, including machine learning, querying, ETL, sentiment analysis, and machine translation.

For instance, suppose you are handling terabytes of data and want to speed up processing by distributing it across a cluster. You already know how the data should be processed, so what remains is to segregate (partition) the data and then process each segment in parallel. Azure Data Lake Analytics is designed for exactly this: it abstracts away parallelism and distributed programming, so you describe the transformation and the service distributes the work.
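The partition-then-process idea can be illustrated on a single machine. In this sketch, records are segregated by a stable hash of their key, each partition is aggregated independently, and the partial results are merged. Threads stand in for cluster nodes; this shows the general pattern only, not how ADLA’s engine is implemented, and the helper names and record shape are hypothetical.

```python
import zlib
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

Record = tuple[str, int]  # (key, value); hypothetical record shape


def partition(records: list[Record], num_partitions: int) -> list[list[Record]]:
    """Segregate records by a stable hash of their key (crc32, unlike hash(),
    does not change between Python processes)."""
    parts: list[list[Record]] = [[] for _ in range(num_partitions)]
    for key, value in records:
        parts[zlib.crc32(key.encode("utf-8")) % num_partitions].append((key, value))
    return parts


def process_partition(part: list[Record]) -> Counter:
    """Work done independently per partition: sum values per key."""
    totals: Counter = Counter()
    for key, value in part:
        totals[key] += value
    return totals


def partitioned_sum(records: list[Record], num_partitions: int = 4) -> dict[str, int]:
    """Partition, process partitions in parallel, then merge partial results."""
    parts = partition(records, num_partitions)
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(process_partition, parts))
    merged: Counter = Counter()
    for partial in partials:
        merged.update(partial)  # keys never span partitions, so this just unions
    return dict(merged)
```

Hashing on the key guarantees that all records for a given key land in the same partition, which is what lets each partition be aggregated without coordinating with the others.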

Apart from batch processing, what else can you do with Azure Data Lake Analytics?

- Shorten debugging and development cycles

- Prepare large volumes of data for loading into a data warehouse

- Develop massively parallel programs with little effort

- Process scraped web data for analysis and data science

- Debug failures and optimize big data jobs

- Process unstructured image data with image-processing libraries

- Query data in relational sources in place (data virtualization), without moving it

Why Azure Data Lake Analytics adoption grew

What does every organization want to do with its data? Primarily two things: store vast amounts of it, and query what it has stored. Neither is as simple as it sounds.

With the explosion in data volumes, existing storage solutions, IT infrastructure, and hardware often lack the sheer capacity to store data and process it on demand. This is one of the reasons digital platforms like Azure Data Lake Analytics gained ground not only among large organizations but also among start-ups.

Many SMEs, start-ups, and even consumers adopted Azure Data Lake Analytics for several reasons. One of the main ones was to avoid the burden of installing, configuring, maintaining, and administering servers, infrastructure, clusters, or virtual machines (VMs). The service can process data regardless of where it is stored in the Azure cloud.

What makes Azure Data Lake Analytics stand out from the rest

- HDFS compatible

- Limitless scalability

- Massive throughput

- Low-latency read/write access

- Pay-per-job pricing model

- Dynamic scaling

- Enterprise-grade security

- Only one language to learn: U-SQL

With Azure Data Lake Analytics, you pay per job rather than per hour: each job is billed for the AUs it uses for as long as it runs (AU-hours). Simply owning an Azure Data Lake Analytics account costs nothing, which is unusual in the big data space, and you are not invoiced at all unless you run a job.
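Because billing is based on AU-hours, a job’s cost can be estimated from the AUs it was given and how long it ran. The sketch below leaves the rate as a parameter you supply, since actual prices depended on region and purchasing plan; the helper names are hypothetical.

```python
def job_cost(aus: int, runtime_minutes: float, price_per_au_hour: float) -> float:
    """Estimate one job's cost: AUs x hours x rate (rate supplied by you)."""
    if aus < 1 or runtime_minutes < 0 or price_per_au_hour < 0:
        raise ValueError("need aus >= 1 and non-negative runtime and price")
    au_hours = aus * runtime_minutes / 60
    return round(au_hours * price_per_au_hour, 2)


def monthly_cost(jobs: list[tuple[int, float]], price_per_au_hour: float) -> float:
    """Sum per-job estimates; an account that ran no jobs costs nothing."""
    return round(sum(job_cost(aus, mins, price_per_au_hour) for aus, mins in jobs), 2)
```

For example, with a placeholder rate of 1.0 per AU-hour, a 10-AU job running for 30 minutes uses 5 AU-hours, so `job_cost(10, 30, 1.0)` returns 5.0, while `monthly_cost([], 1.0)` returns 0 for an idle account.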

Get to the next level of data analytics

With continuous innovation to address the evolving needs of relational database services, analytics, and batch processing workloads, Microsoft gives customers the ability to make smarter business moves and run well-organized operations, from real-time IoT streaming scenarios to business decision support systems. If you are looking for a robust data platform to manage and scale all your data to meet your business’s modern needs, get in touch with Softweb’s experts today.